IOTSC Postgraduate Forum: Intelligent Transportation

智慧城市物聯網研究生論壇: 智能交通

Dear Colleagues and Students,

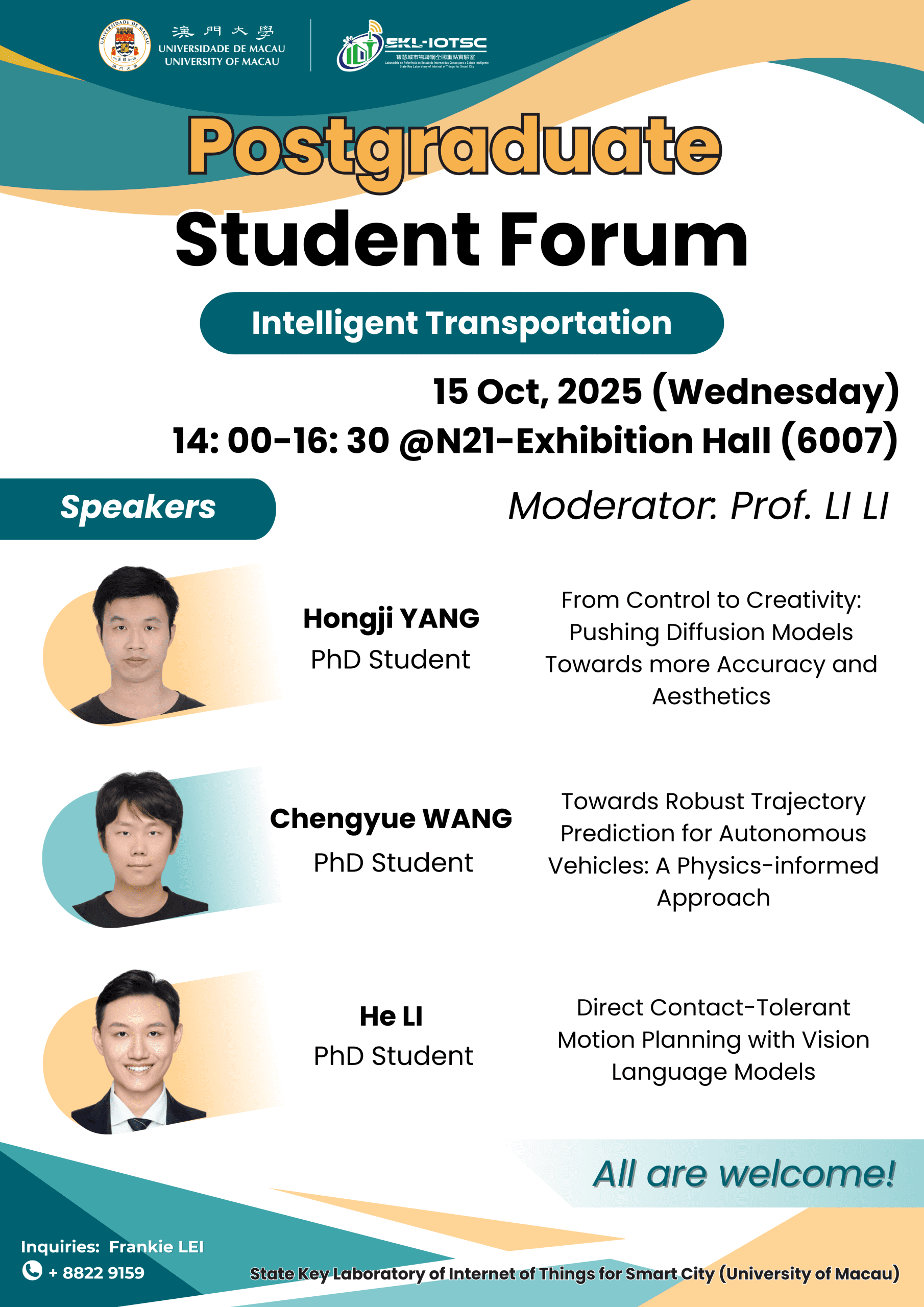

The State Key Laboratory of Internet of Things for Smart City invites you to join our “IOTSC Postgraduate Forum” on 15/10/2025 (Wednesday). The event aims to bring together postgraduate students from various disciplines to share their research, exchange ideas, and engage in meaningful discussions. Three outstanding PhD students will give presentations related to Intelligent Transportation.

Date: 15/10/2025 (Wednesday)

Time: 14:00 – 16:30

Language: English

Venue: N21-6007 (Exhibition Hall)

Moderator: Prof. LI LI

| Presenters | Abstract |
| --- | --- |
| Hongji YANG | **From Control to Creativity: Pushing Diffusion Models Towards more Accuracy and Aesthetics**<br>Diffusion models have demonstrated remarkable capabilities in text-to-image generation, yet challenges remain in achieving both precise control and alignment with human preferences. Our research aims to make diffusion models not only more powerful but also more controllable. One part of our work explores how large vision-language models (LVLMs) can guide diffusion models through their image understanding abilities, enabling diffusion models to generate images that align better with human preferences. Instead of relying on laborious human feedback, the prior knowledge of the LVLM can be used to provide rewards for images, which leads to better image generation. Another part focuses on controllable image generation: we design new methods that allow diffusion models to follow given conditions with much greater precision, supporting fine-grained manipulation of objects, layouts, and their relationships. Together, these efforts push diffusion models towards more powerful, accurate, and flexible image generation. |
| Chengyue WANG | **Towards Robust Trajectory Prediction for Autonomous Vehicles: A Physics-informed Approach**<br>The coexistence of human-driven and autonomous vehicles (AVs) in mixed traffic environments presents substantial challenges for trajectory prediction, which is the cornerstone of safe and reliable autonomous driving. To address this, we propose a novel physics-informed deep learning framework that equips AVs with robust trajectory prediction capabilities. The central goal of our framework is to enable AVs to generate trajectories that are robust to data sparsity and noise, physically feasible, and consistent with real-world driving behaviors. Our framework integrates interdisciplinary insights from vehicle dynamics, kinematics, network science, and cognitive science as physics-informed priors. By embedding these physics-inspired insights into deep learning architectures, the framework achieves robust, realistic, and interpretable trajectory prediction for AVs in diverse traffic environments. To evaluate our framework, we conduct extensive real-world experiments under long-tail scenarios, imperfect perception conditions, and physical feasibility constraints. Our findings indicate that the proposed framework can provide efficient, robust, and physically feasible trajectory prediction in autonomous driving systems. |
| He LI | **Direct Contact-Tolerant Motion Planning With Vision Language Models**<br>Navigation in cluttered environments often requires robots to tolerate contact with movable objects to maintain efficiency. Existing contact-tolerant motion planning (CTMP) methods rely on indirect spatial representations (e.g., prebuilt maps, obstacle sets), resulting in inaccuracies and a lack of adaptiveness to environmental uncertainties. To address this issue, we propose a direct contact-tolerant (DCT) planner, which integrates vision-language models (VLMs) into direct point perception and navigation using two key innovations. The first is the VLM point cloud partitioner (VPP), which performs contact-tolerance reasoning in image space using a VLM, caches the inference outputs as short-memory masks, propagates the masks across frames using odometry, and projects them onto the current scan frame to obtain a contact-aware point cloud (partitioned into contact-tolerant and contact-intolerant points). The second is VLM-guided navigation (VGN), which formulates CTMP as a perception-to-control optimization problem under direct contact-aware point cloud constraints and solves it with a deep unfolded neural network (DUNN). We implement DCT in Isaac simulation and on a real car-like robot, demonstrating that DCT achieves robust and efficient navigation in cluttered environments with movable obstacles, outperforming representative baselines across diverse metrics. |

All are welcome!

For enquiries: Tel: 8822 9159

Email: frankielei@um.edu.mo

Best Regards,

State Key Laboratory of Internet of Things for Smart City