Register now! FSS Computational Social Sciences Workshop: From Job Descriptions to Occupations: Using Neural Language Models to Code Job Data

Register now | Computational Social Sciences Workshop: Prof. Xi SONG & Ms. Jiahui XU, 25/9/2024 (Wed) @E21B-G016

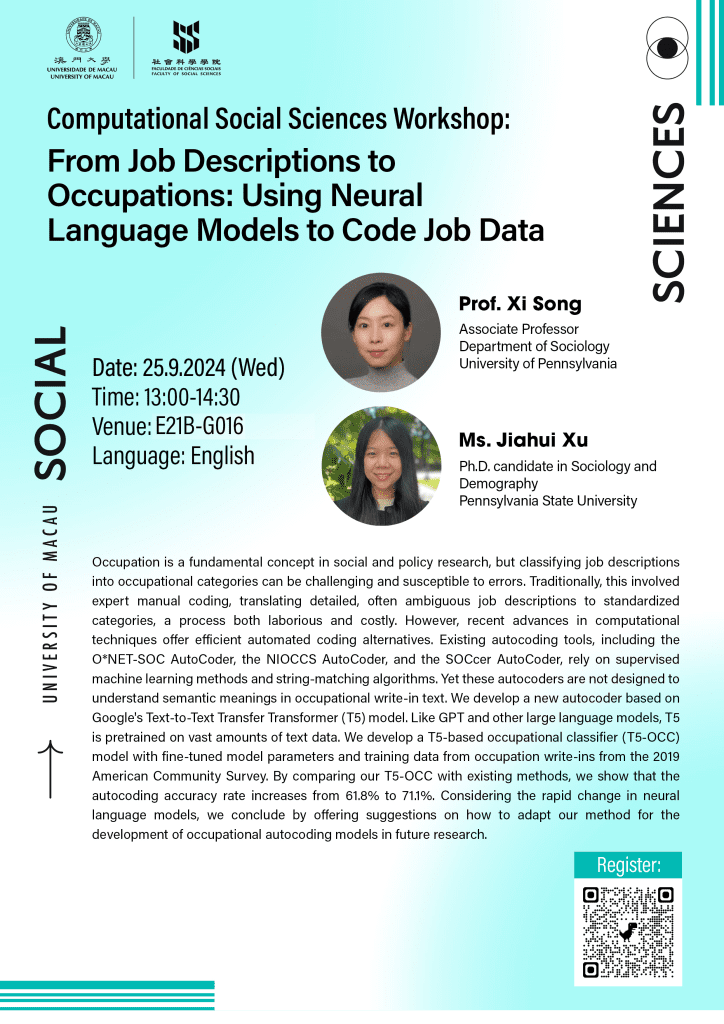

Computational Social Sciences Workshop: From Job Descriptions to Occupations: Using Neural Language Models to Code Job Data

Speakers: Xi SONG, Associate Professor, Department of Sociology, University of Pennsylvania

Jiahui XU, Ph.D. candidate in Sociology and Demography, Pennsylvania State University

Date: 25 September 2024 (Wed)

Time: 13:00 – 14:30

Venue: E21B-G016

Register: https://forms.gle/fqoeqebeP2AAAgGSA

Abstract:

Occupation is a fundamental concept in social and policy research, but classifying job descriptions into occupational categories can be challenging and susceptible to errors. Traditionally, this has required expert manual coding, translating detailed and often ambiguous job descriptions into standardized categories, a process that is both laborious and costly. Recent advances in computational techniques, however, offer efficient automated coding alternatives. Existing autocoding tools, including the O*NET-SOC AutoCoder, the NIOCCS AutoCoder, and the SOCcer AutoCoder, rely on supervised machine learning methods and string-matching algorithms. Yet these autocoders are not designed to understand semantic meanings in occupational write-in text. We develop a new autocoder based on Google’s Text-to-Text Transfer Transformer (T5) model. Like GPT and other large language models, T5 is pretrained on vast amounts of text data. We develop a T5-based occupational classifier (T5-OCC) by fine-tuning the model parameters on training data drawn from occupation write-ins in the 2019 American Community Survey. By comparing our T5-OCC with existing methods, we show that the autocoding accuracy rate increases from 61.8% to 71.1%. Considering the rapid change in neural language models, we conclude by offering suggestions on how to adapt our method for the development of occupational autocoding models in future research.
