IOTSC Postgraduate Forum: Intelligent Transportation

智慧城市物聯網研究生論壇: 智能交通

Dear Colleagues and Students,

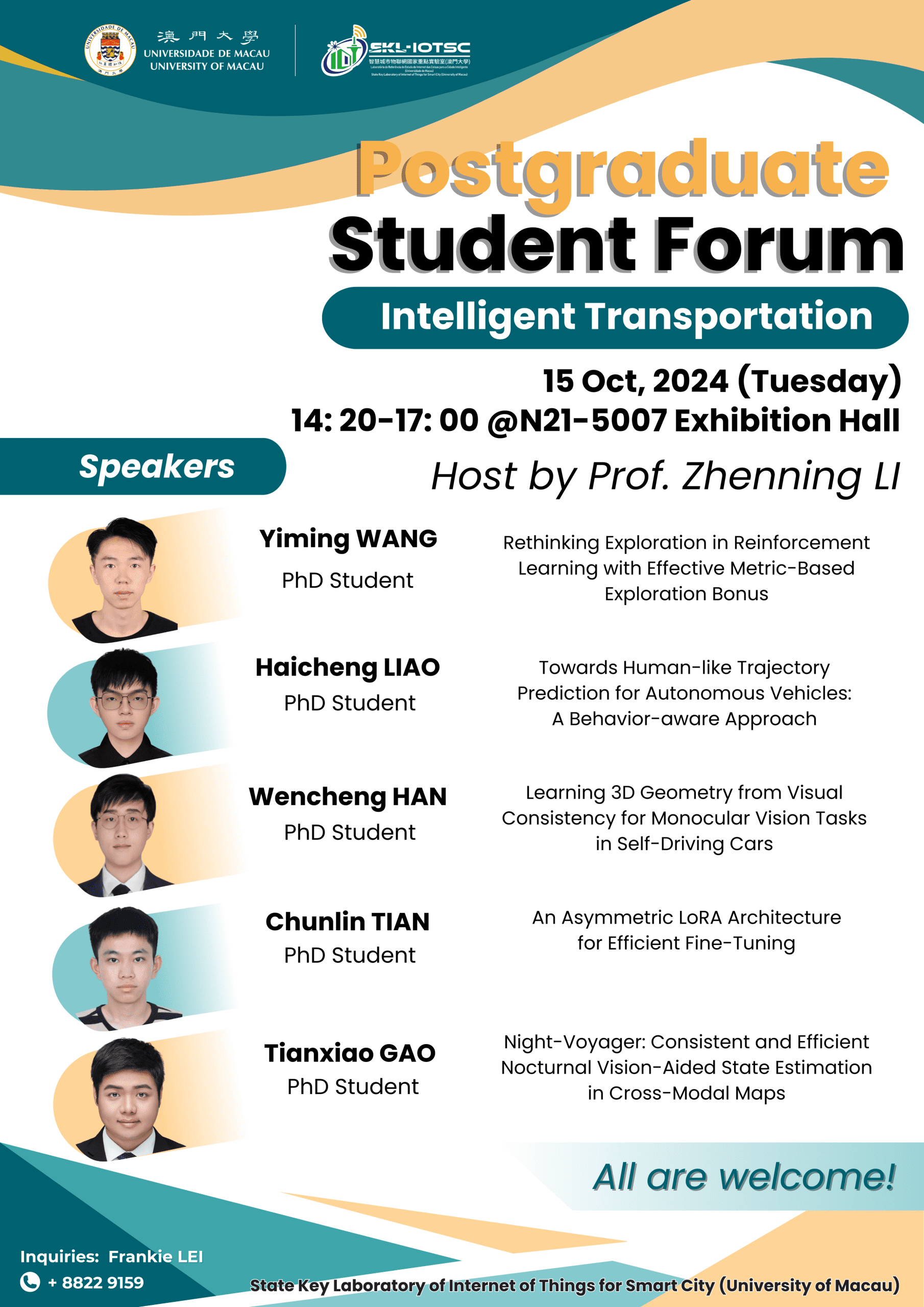

The State Key Laboratory of Internet of Things for Smart City would like to invite you to join our “IOTSC Postgraduate Forum” on 15/10/2024 (Tuesday). The event aims to bring together postgraduate students from various disciplines to share their research, exchange ideas, and engage in meaningful discussions. We are pleased to invite six outstanding PhD students to give presentations related to Intelligent Transportation.

Date: 15/10/2024 (Tuesday)

Time: 14:20 – 17:00

Language: English

Venue: N21-5007 (Exhibition Hall)

Host: Prof. Zhenning LI

| Presenter | Title | Abstract |
| --- | --- | --- |
| Yiming WANG | Rethinking Exploration in Reinforcement Learning with Effective Metric-Based Exploration Bonus | Enhancing exploration in reinforcement learning (RL) through the incorporation of intrinsic rewards, specifically by leveraging state discrepancy measures within various metric spaces as exploration bonuses, has emerged as a prevalent strategy to encourage agents to visit novel states. The critical factor lies in how to quantify the difference between adjacent states as novelty for promoting effective exploration. Nonetheless, existing methods that evaluate state discrepancy in the latent space under the L1 or L2 norm often depend on count-based episodic terms as scaling factors for exploration bonuses, significantly limiting their scalability. Additionally, methods that utilize the bisimulation metric for evaluating state discrepancies face a theory-practice gap due to improper approximations in metric learning, particularly struggling with hard exploration tasks. To overcome these challenges, we introduce the Effective Metric-based Exploration-bonus (EME). EME critically examines and addresses the inherent limitations and approximation inaccuracies of current metric-based state discrepancy methods for exploration, proposing a robust metric for state discrepancy evaluation backed by comprehensive theoretical analysis. Furthermore, we propose a diversity-enhanced scaling factor, integrated into the exploration bonus and dynamically adjusted by the variance of predictions from an ensemble of reward models, thereby enhancing exploration effectiveness in particularly challenging scenarios. Extensive experiments are conducted on hard exploration tasks within Atari games, Minigrid, Robosuite, and Habitat, which illustrate our method’s scalability to various scenarios, including pixel-based observations, continuous control tasks, and simulations of realistic environments. |
| Haicheng LIAO | Towards Human-like Trajectory Prediction for Autonomous Vehicles: A Behavior-aware Approach | Accurately predicting the trajectories of surrounding vehicles is essential for safe and efficient autonomous driving. This paper introduces a novel behavior-aware trajectory prediction model (BAT) that incorporates insights from traffic psychology, human behavior, and decision-making. BAT seamlessly integrates four modules: behavior-aware, interaction-aware, priority-aware, and position-aware. These modules perceive and understand underlying interactions and account for uncertainty and variability in prediction, enabling higher-level learning and flexibility without rigid categorization of driving behavior. Importantly, BAT eliminates the need for manual labeling in the training process and addresses the challenges of non-continuous behavior labeling and the selection of appropriate time windows. Furthermore, we evaluate BAT’s performance across the Next Generation Simulation (NGSIM), Highway Drone (HighD), Roundabout Drone (RounD), and Macao Connected Autonomous Driving (MoCAD) datasets, showcasing its superiority over prevailing state-of-the-art (SOTA) benchmarks in terms of prediction accuracy and efficiency. Remarkably, even when trained on a reduced portion of the training data (25%) and with a much smaller number of parameters, BAT outperforms most of the baselines, demonstrating its robustness and efficiency in challenging traffic scenarios, including highways, roundabouts, campuses, and busy urban locales. This underlines its potential to reduce the amount of data required to train autonomous vehicles, especially in corner cases. In conclusion, the behavior-aware model represents a significant advancement in the development of autonomous vehicles capable of predicting trajectories with the same level of proficiency as human drivers. |
| Wencheng HAN | Learning 3D Geometry from Visual Consistency for Monocular Vision Tasks in Self-Driving Cars | Monocular vision tasks, like depth estimation and 3D object detection in self-driving cars, face challenges due to the limited 3D information in 2D images. This talk highlights our recent achievements that leverage visual consistency to enhance monocular vision models. We first introduce the Direction-aware Cumulative Convolution Network (DaCCN) for self-supervised depth estimation. DaCCN addresses direction sensitivity and environmental dependency, improving feature representation and achieving state-of-the-art performance. Next, we present the Rich-resource Prior Depth estimator (RPrDepth), which uses rich-resource data as prior information. RPrDepth estimates depth from a single low-resolution image by referencing pre-extracted features. Finally, we discuss a weakly-supervised approach for monocular 3D object detection that relies only on 2D labels. By using spatial and temporal view consistency, this method achieves results comparable to fully supervised models and enhances performance with minimal labeled data. |
| Chunlin TIAN | An Asymmetric LoRA Architecture for Efficient Fine-Tuning | Adapting Large Language Models (LLMs) to new tasks through fine-tuning has been made more efficient by the introduction of Parameter-Efficient Fine-Tuning (PEFT) techniques, such as LoRA. However, these methods often underperform compared to full fine-tuning, particularly in scenarios involving complex datasets. This issue becomes even more pronounced in complex domains, highlighting the need for improved PEFT approaches that can achieve better performance. Through a series of experiments, we have uncovered two critical insights that shed light on the training and parameter inefficiency of LoRA. Building on these insights, we have developed HydraLoRA, a LoRA framework with an asymmetric structure that eliminates the need for domain expertise. Our experiments demonstrate that HydraLoRA outperforms other PEFT approaches, even those that rely on domain knowledge during the training and inference phases. |
| Tianxiao GAO | Night-Voyager: Consistent and Efficient Nocturnal Vision-Aided State Estimation in Cross-Modal Maps | Accurate and robust state estimation at nighttime is essential for autonomous navigation of mobile robots to achieve nocturnal or round-the-clock tasks. An intuitive and practical question arises: Can low-cost standard cameras be exploited for nocturnal state estimation? Regrettably, most current visual methods tend to fail due to adverse illumination conditions, even with active lighting or image enhancement. A crucial insight, however, is that the streetlights in most urban scenes can provide static and salient visual information at night. This inspires us to design an object-level nocturnal vision-aided state estimation framework, named Night-Voyager, which leverages cross-modal maps and keypoints to enable versatile all-day localization. Night-Voyager starts with a fast initialization module which solves the global localization problem. With the effective two-stage cross-modal data association approach, the system state can be accurately updated by the map-based observations. Meanwhile, to address the challenge of large uncertainties in visual observations during nighttime, a novel matrix Lie group formulation and a feature-decoupled multi-state invariant filter are proposed for consistent and efficient state estimation. Comprehensive experiments in both simulation and diverse real-world scenarios (about 12.3 km total distance) showcase the effectiveness, robustness, and efficiency of Night-Voyager, filling a critical gap in nocturnal vision-aided state estimation. |

For enquiries: Tel: 8822 9159

Email: frankielei@um.edu.mo

Best Regards,

State Key Laboratory of Internet of Things for Smart City